As the field of artificial intelligence continues to evolve at breakneck speed, things that seem impossible one day can become reality the next. Fantasy is overtaken by reality. That’s also what happened when I started writing this story. As I was talking to experts about whether, and how soon, computers would be able to write scientific papers on their own, OpenAI’s new text generator, ChatGPT, suddenly appeared.

The texts that ChatGPT generates may not hold up to scientific scrutiny just yet, but they do mark a serious step forward in the development of computer-generated text.

Like millions of other people around the world, I tried out ChatGPT for myself, entering the following prompt: ‘Write a scientific essay on the significance of DNA for career choices.’

Within seconds, I was presented with an understandable, coherent text. It starts with an introductory paragraph on the increased importance of DNA in a variety of areas, including career choices, before presenting a number of examples.

This is where things get problematic: the program completely ignores any ethical objections one might have to this subject. ‘DNA analysis has been used to identify the best candidates for certain positions, such as medical doctors or scientists. By analyzing the DNA of potential candidates, employers can determine which individuals have the best genetic makeup for the job’, ChatGPT observes, somewhat blithely. Sure, it’s a coherent text that sticks to the subject, probably by drawing on various internet sources about DNA and career choices. But the world isn’t any better off for it, nor is it any wiser. What is missing is ethical reflection: the text is basically just a patchwork of known facts, with no sense of morality, wisdom or insight.

No creative contribution

“AI holds up a mirror to us”, says Haroon Sheikh, endowed professor of Strategic Governance of Global Technologies and senior research fellow at the Scientific Council for Government Policy. “As we make scientists’ work more and more standardised and measurable, it becomes easier to replicate it using computers. That’s why we’re now able to produce something that looks like a standard scientific article, but that’s not a real, substantive and creative contribution to science, although of course it is very difficult to define exactly what constitutes creativity.”

He compares it to plant-based meat. “People will say, ‘It tastes almost the same as chicken nuggets’, forgetting that those chicken nuggets are also heavily processed, to the point that they hardly resemble real meat anymore.”

Sheikh thinks it unlikely that computers will actually replace scientists any time soon. “Science is about coming up with new theories, which requires creative thinking. And that’s fundamentally different from just analysing large amounts of data. We still don’t really know how people come up with new ideas. So there would have to be a big leap forward before computers are able to do that.”

Training computer systems

Other experts are more optimistic about the potential of AI. While there are still a lot of practical limitations, it’s theoretically possible that a computer could generate a hypothesis based on data. “An argument is really just a pattern between different data points. It’s not that difficult”, says Lauren Waardenburg. A researcher at VU Amsterdam until recently, she now works at the University of Lille, specialising in how organisations deal with computer systems.

“Computers are actually really good at generating hypotheses”, argues Frank van Harmelen, professor of Knowledge Representation and Reasoning at VU Amsterdam. “It’s just that they still generate a lot of bad hypotheses as well. For now, we still need humans to separate the good hypotheses from the bad, but I don’t see why you couldn’t train computer systems to do that – and to do it better – in the future.”

Indeed, Van Harmelen is already working on a program like that. Together with social psychologists from VU Amsterdam, his group developed software that, based on a database of 2,500 completed experiments, comes up with its own suggestions for new experiments. “A lot of them are still unusable, but we’re looking for ways to improve that”, he says.

Human fickleness

Will we even need theories at all in the future, or will analysing raw data be enough to predict developments in the world? In 2008, Chris Anderson made exactly that argument in an article titled ‘The End of Theory’ in the technology magazine Wired: scientific theories would soon become obsolete, because they often describe reality poorly and only hold true under certain circumstances. Why not just look at the data and run it through an algorithm? Why not treat the entire world as a single database?

But the problem lies precisely in that assumption: that there is such a thing as a single database. A good database needs unambiguous data. And unfortunately, humans are a lot less consistent than computers when entering data. “It’s terrible”, says Van Harmelen. “There is not a single human gene that only appears under one name in major scientific databases like PubMed. And different genes will sometimes have the same name.” The problem is not limited to genes: scientists around the world describe many other concepts using inconsistent terminology as well.

But what about the analysis of images? With scans of tumours, for example, human fickleness is less of a factor. But here too inconsistency abounds. “Different scans of the same tumours in the same parts of the body still show geographical differences”, says Waardenburg. “American scans are different from European ones. We don’t know why that is, exactly. It could be that they were made at a different time of day or using a different brand of scanning equipment.”

Waardenburg researches how organisations deal with the possibilities offered by computers in practice. She sees how much goes wrong every day because of small things that keep systems from being used optimally or prevent data from being exchanged. “Learning how to use a computer system effectively requires a lot of extra work, especially in the beginning”, she says. “Many organisations struggle to get their systems up and running properly.”

In addition, for historical reasons, organisations and disciplines classify data in all kinds of different ways. This is also true within science. “In practice, multidisciplinary data sharing turns out to be incredibly difficult because of those variations”, Waardenburg notes. As a result, there’s a world of difference between what is technically possible and what is feasible in practice.

Hallucinating AI systems

The most optimistic expert I talked to was Piek Vossen, professor of Computational Lexicology at VU Amsterdam. Vossen thinks that in ten years’ time, we’ll be well on our way towards producing computer-generated scientific papers. “Last year, one of my students handed in an essay that started with four computer-generated sentences. If she hadn’t explained that they were computer-generated, I wouldn’t have known”, he says.

Vossen does stress that such a text has not been consciously given meaning: the AI completes sentences based on things it frequently encounters in other texts. It can easily choose from a number of options to complete a sentence like ‘The cat walks along…’: the edge of the roof, the windowsill, the balcony. “But in doing so, the AI has no idea what a cat represents, or what the verb ‘to walk’ means”, Vossen explains. “So a computer-generated article will always need to be reviewed by a human. But I definitely think AI could help generate the first draft of an article based on data and keywords.”

There are still plenty of issues and teething problems when it comes to automatically generated content. AI systems regularly ‘hallucinate’, for instance, making up information on topics they know too little about. Computer programs also draw conclusions based on existing data, which often contains biases: for example, the assumption that all scientists are male, white, American and work at Stanford.

The algorithm used by Amazon a few years ago to select the best applicants for programmer jobs still serves as a painful example of this problem. The algorithm placed female candidates at the bottom of its rankings, not because they lacked the right qualifications, but because the AI’s decision-making process was informed by Amazon’s existing workforce at the time, which included very few female programmers. There was a similar incident at our own university, where anti-cheating software repeatedly failed to recognise a student of colour as a human being.

Self-driving cars

AI programs draw conclusions based on past situations – conclusions that sometimes make no sense and that can even be immoral. But humans aren’t always great at logical reasoning either, Van Harmelen argues. “People are too quick to see causal connections. Think of astrology, for instance, and all the other superstitions people have.” In his research on hybrid intelligence, he therefore tries to combine the best characteristics of humans and computers. “We’re going to try to build a scientific assistant in the next few years”, he says. “It won’t be able to replace scientists, but it will be helpful at different stages of the scientific process: collecting relevant literature, formulating a hypothesis, setting up and conducting experiments, collecting data and finally, perhaps, writing the first draft of an article.”

But how long will it take for that to become a reality? All four experts mention self-driving cars. “Ten years ago, we thought we’d have self-driving cars in two years”, says Sheikh. “And now we still think it will take two more years. In a controlled environment, self-driving cars perform fine, but things get more complex when a duck suddenly crosses the road.”

Welcome to the real world, computer, where data is entered messily and ducks cross the road. Where data from the past can’t necessarily be used as a norm for the future. And where people have been struck by creative flights of fancy for centuries, without us knowing exactly where they come from. Logic and data will get you a long way, but for now you can only present us with imitations – some stunning, and others just plain embarrassing.

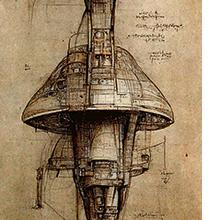

The images in this article were created with AI image generators. Graphic designer Rob Bömer tried out several of them. Most of the images were generated with Nightcafe.